How and When the CAHSS Community Can Use ChatGPT Responsibly

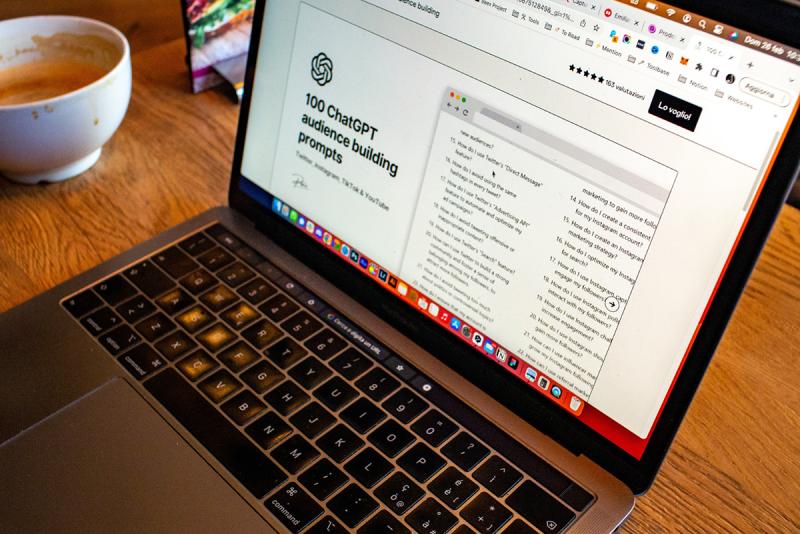

Just months after its release by OpenAI in November 2022, ChatGPT was estimated to have reached 100 million users, making it the fastest-growing technology platform and raising new concerns about protecting internet privacy in multiple sectors, including academia.

To address these growing concerns and the recurring questions about the pros and cons of using ChatGPT for course- and work-related tasks, the College of Arts, Humanities & Social Sciences (CAHSS) hosted a workshop on ChatGPT for CAHSS faculty, staff and graduate students from noon to 1:30 p.m. on Thursday, Sept. 21, in Sturm Hall, Room 286.

The event featured a panel discussion, including an interactive activity, with Josh Wilson, chair and professor in the Department of Political Science; Juli Parrish, director of the University Writing Center; and Richard Colby, director of the First-Year Writing Program. Parrish and Colby covered the writing program’s new guidance statements on ChatGPT for students, and Wilson shared experiences teaching courses in which students have tried to use ChatGPT to complete their assignments.

Ahead of the workshop, the CAHSS newsroom asked panelists to weigh in on a few key thoughts to consider when faculty, staff, students and alumni use ChatGPT in an academic setting.

ChatGPT provides an interactive, collaborative resource

Conversing with ChatGPT can provide a valuable brainstorming experience for certain writing assignments and help students, faculty and staff consider diverse ideas and better communicate with their targeted audiences.

“The point is to use generative AI (genAI) as an interactive tool,” Colby said. “That means not treating it as a robot to automate tasks for you but as a collaborative resource. Having writers interact with genAI, and then reflect on what sorts of interactive responses genAI is offering, can make apparent how we, as writers, are in constant conversation with ourselves when we write.”

ChatGPT can compromise the writing/learning process

Using genAI to replace the act of writing replaces the time spent practicing writing skills and the intellectual growth that comes from actually doing the thinking and writing, according to Colby.

“Writing asks us to process and translate ideas from the individual to the social, and then re-translate it back,” he said.

According to Parrish, one of the Writing Center’s greatest challenges right now “is figuring out how to use generative AI to promote, and not stand in for, learning. Learning to write meaningful and effective prompts for generative AI is one available pathway for that.”

Illegitimate use is detectable and carries consequences

Wilson has spotted striking, telltale similarities in the substance of the ChatGPT-reliant papers that students submitted for assignments he gave over the summer.

Longer papers tended to “not go anywhere substantively,” he said. “That is, they would have paragraphs that would introduce an idea, but they would largely repeat the one idea as opposed to progressing in a linear and constructive fashion.”

Such papers also lacked detail and often failed to reference source materials, he said.

As for consequences, it’s up to individual faculty members to decide how to respond when they detect violations involving the use of ChatGPT.

“In my case, I am very clear that I will not tolerate academic dishonesty (i.e., plagiarism, cheating or otherwise engaging in efforts to undermine honestly participating in the learning/scholarly process),” Wilson said. “I am now clearer in specifying that I consider using generative AI to produce materials that are supposed to develop and demonstrate intellectual engagement as acts of academic dishonesty.”

In the cases that Wilson discovered over the summer, he notified the students involved that they had earned failing grades on the assignments and in the class, and worked with the Office of Student Rights & Responsibilities to determine how to proceed.

When it comes to using AI for course assignments, Parrish added that “generative AI seems to offer some options that both students and faculty might find helpful; for example, to help a writer figure out the shape of an unfamiliar genre or gain more consistency in a citations list. However, our consultants will be working with writers to honor the policy in place in their particular class or writing situation.”

ChatGPT use by faculty and staff can jeopardize data privacy

According to Colby, universities face the same workplace drawbacks of using ChatGPT as industry does: sharing confidential information with AI systems can jeopardize privacy and an organization’s sensitive proprietary data.

Colby said “the future of genAI is proprietary language models” as opposed to large language models (LLMs) that are trained on large datasets.

“That might mean training genAI on specific proprietary datasets or even individual writers,” he said.

For example, “while I doubt anybody is working on this right now, I imagine somebody is going to come along and offer genAI FERPA- (Family Educational Rights and Privacy Act) or HIPAA- (Health Insurance Portability and Accountability Act) compliant language models.”

Today’s students, future leaders and workers need to learn to use genAI/ChatGPT ethically and responsibly

If educators don’t teach and model ethical and responsible use of genAI and ChatGPT in their courses, “others will, and then we will be playing the reacting game (or worse, the outlawing game),” Colby said. “In a world where higher education is already seen by some as out-of-touch, we cannot afford to take that stance.”

Educators should teach students not only how to use genAI, but how to interrogate the ethical implications and to build new and better genAIs designed for effective and equitable use, according to Colby.

He added that LLMs tend to reinforce biases. Teaching students to be aware of the possibility of biases and question their validity is key.

“By having students not only interrogate these biases but find ways to design prompts that avoid such biases and even build models that encourage a diversity of responses, we are preparing students for the future,” Colby said.

Parrish said “bias in this case refers not just to particular viewpoints or arguments that users might find in an LLM but also to language itself. Some students and faculty might appreciate the relatively ‘correct’ prose that generative AI can produce, but that correctness might come at the cost of individual style or voice or even erase traces of individual writers' complex language use.”

Where OpenAI might be headed with future iterations of ChatGPT

Colby said OpenAI recently released “Teaching with AI,” which reinforces the idea that ChatGPT is a collaborator on a task, not a replacement for doing it. He asserted that “although ChatGPT was trained on mass communication and designed for a generalist rather than a specialist audience, the future of GenAI is toward narrowcast communication [targeting messages toward specific, niche audiences].”